After three weeks of intensive learning and building a solid understanding of data engineering fundamentals, the final week focused on developing a project inspired by the Amplitude data pipeline. The challenge involved designing and implementing a pipeline to ingest and process Mailchimp data within a four-day timeframe. The work required demonstrating key stages of a modern data workflow—Extract, Load, Transform, and Orchestration—completed in any order that best suited the development process. The project also required writing a short blog entry each day to document progress, capture ideas, and outline possible next steps.

Laying the Foundations of the Pipeline

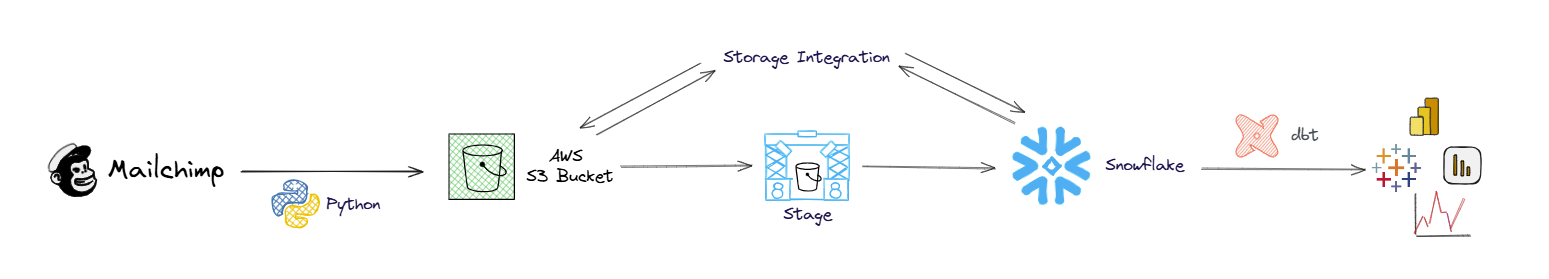

Before any API calls were made, the overall pipeline design was planned from end to end to ensure a smooth flow of data. The goal was to move Mailchimp data into a reliable analytics environment, starting with extraction and ending with clean, well-modelled tables ready for reporting.

The first step was to choose the core tools and prepare the environments. A Python script was selected to call the Mailchimp API, extract the data, and load it into an Amazon S3 bucket as the raw landing zone. This setup required creating a GitHub repository for version control and an S3 bucket configured with the appropriate keys and policies to ensure data security. Because the GitHub repository folder structure would be created using the dbt project, this was set up next. The dbt project was created and connected to both the GitHub repository and the Snowflake database, allowing changes to be tracked and deployed in a structured way.

The S3 bucket was then configured to connect to a Snowflake database, enabling the data to be moved into cloud storage and queried efficiently. This stage of the setup involved creating storage integration, a pipeline, and a stage in Snowflake to handle the incoming files. The database was linked to the dbt project to handle transformation and modelling.

Mailchimp

The Mailchimp API is a set of programming tools that allows developers to interact with Mailchimp’s platform directly. It utilises RESTful principles, enabling applications to access and manage data, such as audience lists, email campaigns, and automations, programmatically. With the API, it’s possible to add or update subscribers, create and send email campaigns, automate workflows, and retrieve performance data, all without using Mailchimp’s web interface.

For this project, the Mailchimp API was called specifically to extract data about email campaigns. This involved retrieving details such as campaign metadata, send dates, and performance metrics, including opens, clicks, and other engagement statistics. To handle large data volumes, pagination was used to fetch campaigns in batches. The extraction process included both historical data - retrieving all past campaigns up to a specified cutoff date - and incremental updates, which pulled only campaigns created within specific recent timeframes. The extracted campaign data was saved in JSON format for subsequent processing and transformation steps in the pipeline.

Extract & Load

After setting up the environments and reviewing the Mailchimp API documentation, the remaining time was dedicated to planning the data extraction strategy. A concise Python script was developed to call the Mailchimp campaigns endpoint, attaching the extraction timestamp to each JSON response. Instead of pushing data to the cloud immediately, the script saved the JSON files locally while logging both informational messages and any errors encountered during execution. This approach facilitated testing and debugging before integrating with the full pipeline. The campaigns endpoint provided detailed information on the email campaigns, allowing for targeted data extraction and future transformation steps.

mailchimp = Client()

mailchimp.set_config({

"api_key": api_key,

"server": server_prefix

})

# print(f"Saved to: {filepath}")

logging.info("Environment Setup: calling api")

api_check = mailchimp.ping.get()

response = mailchimp.campaigns.list()

# create filename with extract time

filename = f"mailchimp_campaigns_{extract_time}.json"

filepath = os.path.join(base_path, filename)

if api_check == {"health_status": "Everything's Chimpy!"}:

# log successful api call

logging.info('Successful API call')

with open(filepath, "w", encoding="utf-8") as f:

json.dump(response, f, indent=4)

logging.info(f"Saved to: {filepath}")

else:

logging.error(f"Unsuccessful API call! Error {response.status_code}: {response.text}")

Some Final Thoughts & Next Steps

The next steps will focus on refining the current script to support pagination, enabling it to retrieve all campaign data in manageable chunks rather than a single large response. This involves looping through API calls using offset and count parameters until all campaigns have been fetched, ensuring no data is missed. To avoid duplicate data in S3, the strategy could include using unique campaign identifiers along with extraction timestamps to overwrite older files or maintain incremental updates based on the last extraction time. The script will also be refactored into modular functions—for example, one for paginated campaign retrieval and another for uploading JSON files to S3. Breaking the code into functions makes it easier to orchestrate the workflow in tools like Kestra.

After the data lands in S3, Snowflake’s stage and pipeline will ingest it, making it available in dbt. Within dbt, the data will be transformed following the medallion architecture, structuring it into bronze (staging), silver (intermediate), and gold (marts) layers. These layers will be built in Snowflake to support downstream reporting and visualisation, ensuring the data is easy to explore and use.